100% in Your Cloud.

100x more efficient Serverless Vector Databases

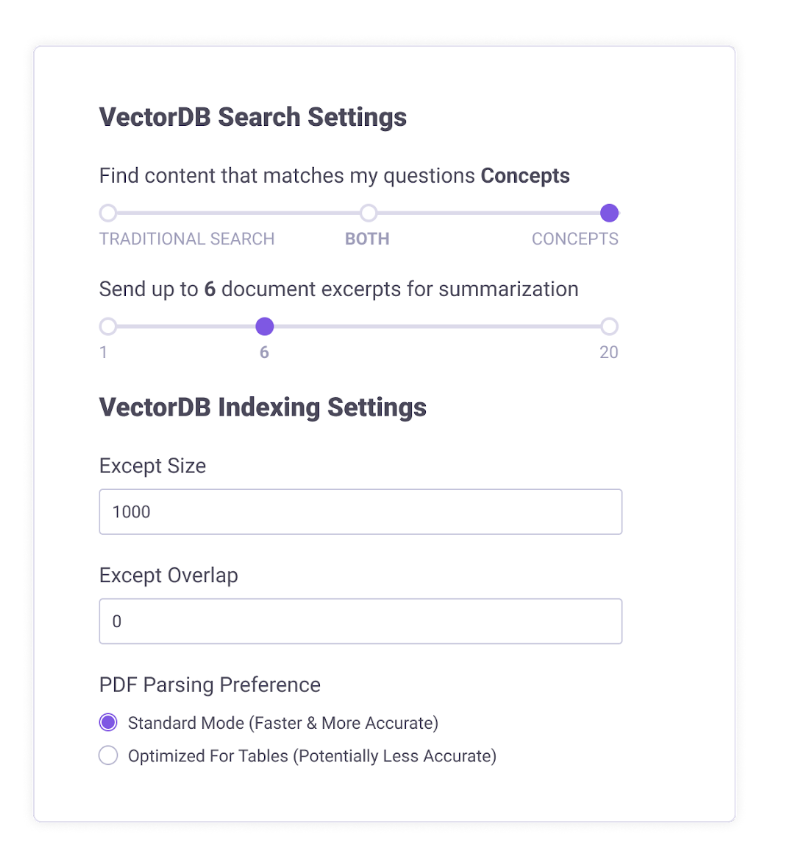

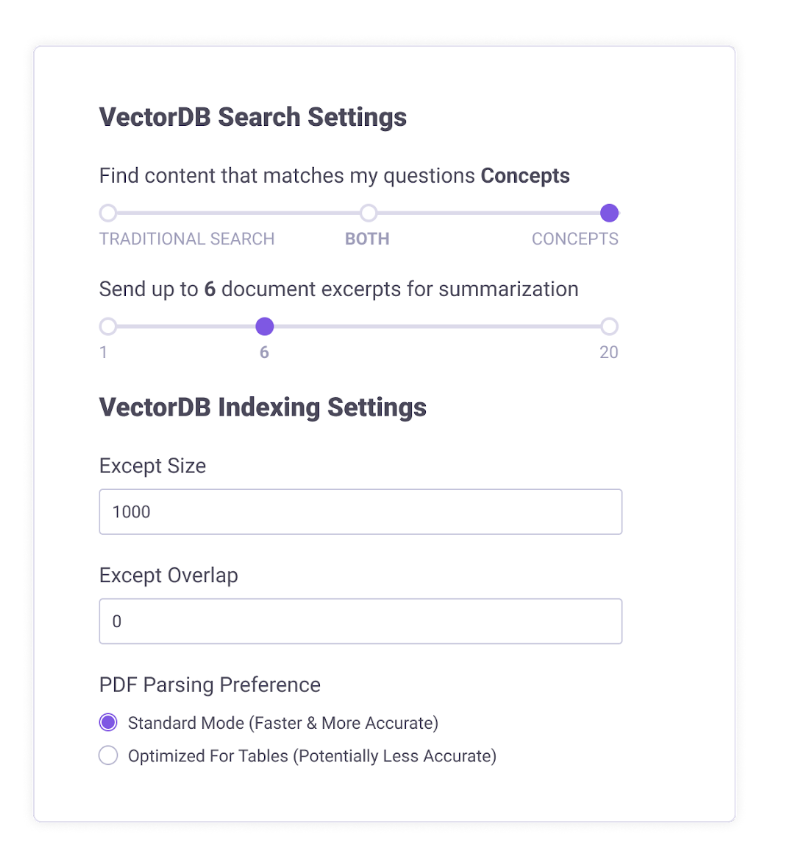

Vector Databases are a key component of Retrieval Augmented Generation (RAG) use cases, but they can be extremely expensive, often eclipsing the cost of the Large Language Model (LLM) itself. Why? Because the Vector Database stays running, costing you money, whether or not you are actively using it. While some Vector DB vendors have announced serverless versions, these run in a shared environment outside the customer’s control. Aible now includes a serverless Vector Database, built on open source ChromaDB, that runs fully in the customer’s own cloud. Because it is serverless, it is 100 times more cost efficient for most use cases. Because it runs fully in the customer’s cloud under the customer’s control, it is more secure. Because it is fully integrated into the Aible experience, users do not need any data science skills to use this technology for their genAI use cases.
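
The cost argument is that an always-on Vector Database bills for idle hours, while a serverless one bills only while it serves queries. The back-of-the-envelope sketch below makes that arithmetic concrete. Every rate and workload figure in it is a hypothetical placeholder, not Aible’s or any cloud provider’s pricing, so the ratio it prints illustrates the shape of the argument rather than reproducing the 100x figure.

```python
# Back-of-the-envelope comparison: always-on vector database vs. serverless.
# ALL rates and workload numbers are hypothetical placeholders, not real pricing.

HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def always_on_cost(hourly_rate: float) -> float:
    """Monthly cost of a dedicated instance that runs 24/7, busy or idle."""
    return hourly_rate * HOURS_PER_MONTH


def serverless_cost(queries: int, seconds_per_query: float, rate_per_second: float) -> float:
    """Monthly cost when compute is billed only while queries are executing."""
    return queries * seconds_per_query * rate_per_second


if __name__ == "__main__":
    # Hypothetical workload: 20,000 queries a month, about 2 s of compute each.
    dedicated = always_on_cost(hourly_rate=1.00)
    on_demand = serverless_cost(queries=20_000, seconds_per_query=2.0, rate_per_second=0.0005)
    print(f"always-on:  ${dedicated:,.2f}/month")   # $730.00
    print(f"serverless: ${on_demand:,.2f}/month")   # $20.00
    print(f"ratio:      {dedicated / on_demand:.1f}x")  # 36.5x
```

In this simple model idle hours cost nothing on the serverless side, so the gap widens as utilization falls; a database that is busy around the clock narrows or even reverses it.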

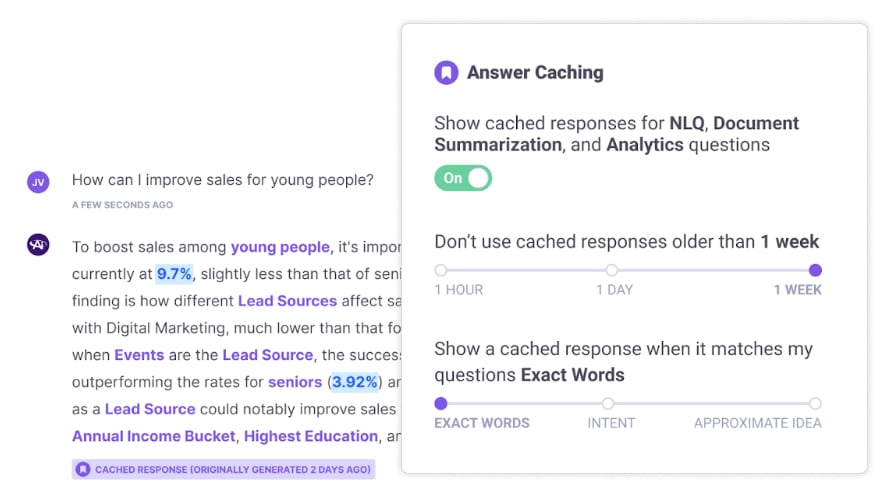

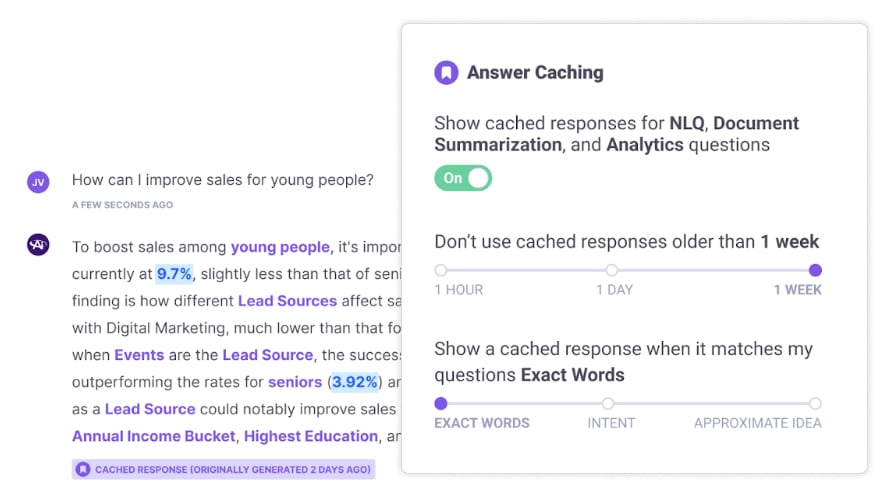

Automated Caching for all types of questions

Our Valentine’s Day release is all about delivering on customer feature requests. Ever since we introduced our comprehensive logging capabilities, customers have noticed duplicate questions from different users in their logs and asked us: can’t Aible recognize that it has already answered a question and avoid incurring additional LLM, Vector DB, and analysis costs?
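
The release does not describe how Aible detects repeated questions. As a minimal sketch of the general idea, the code below shows an exact-match answer cache keyed on a normalized form of the question, so trivially different phrasings ("What were Q3 sales?" vs. "what were q3 sales") reuse one stored answer instead of re-running the LLM and Vector DB pipeline. `AnswerCache`, `normalize`, and `answer_fn` are hypothetical names. Matching genuine paraphrases would need a different technique, semantic caching by embedding similarity, which this sketch does not attempt.

```python
import re
from typing import Callable, Dict


def normalize(question: str) -> str:
    """Canonical cache key: lowercase, drop punctuation, collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", question.lower())
    return " ".join(text.split())


class AnswerCache:
    """Hypothetical exact-match cache over normalized questions."""

    def __init__(self, answer_fn: Callable[[str], str]) -> None:
        self._answer_fn = answer_fn      # the expensive LLM / vector DB pipeline
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def ask(self, question: str) -> str:
        key = normalize(question)
        if key in self._store:           # already answered: no new LLM/DB cost
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._answer_fn(question)
        self._store[key] = answer
        return answer


if __name__ == "__main__":
    cache = AnswerCache(lambda q: f"answer to: {q}")
    cache.ask("What were Q3 sales?")
    cache.ask("what were q3 sales")      # served from the cache
    print(cache.hits, cache.misses)      # 1 1
```

A production cache would also need an invalidation rule for when the underlying data changes, and a check that a cached answer is one the asking user is allowed to see.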

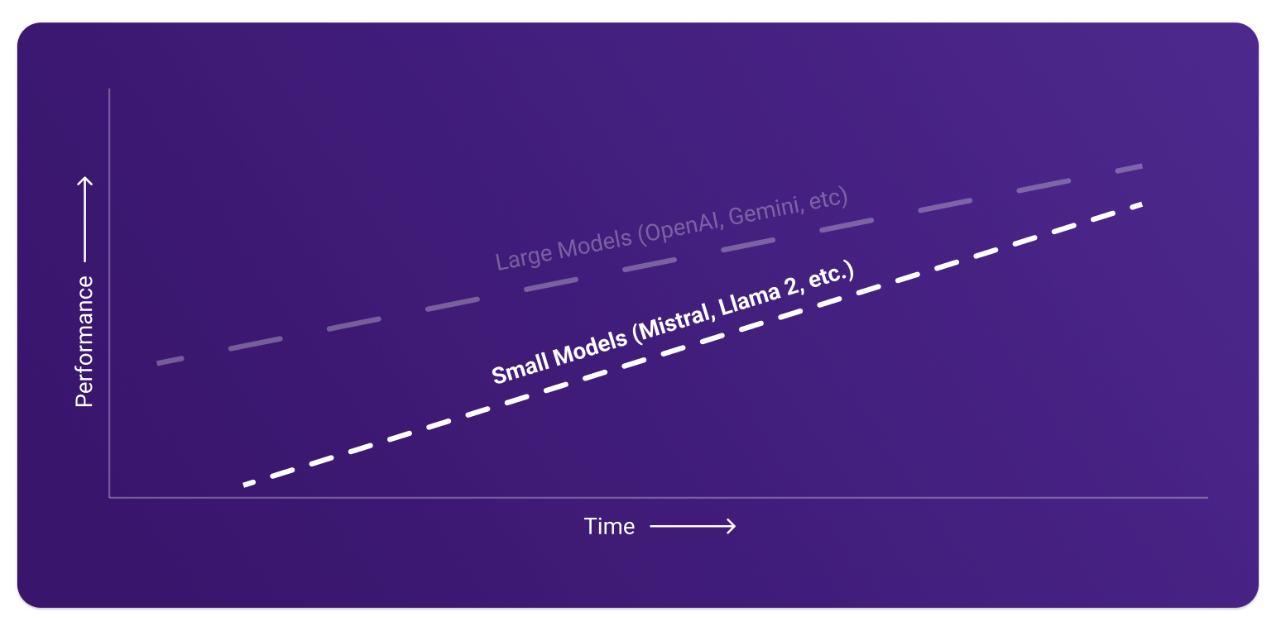

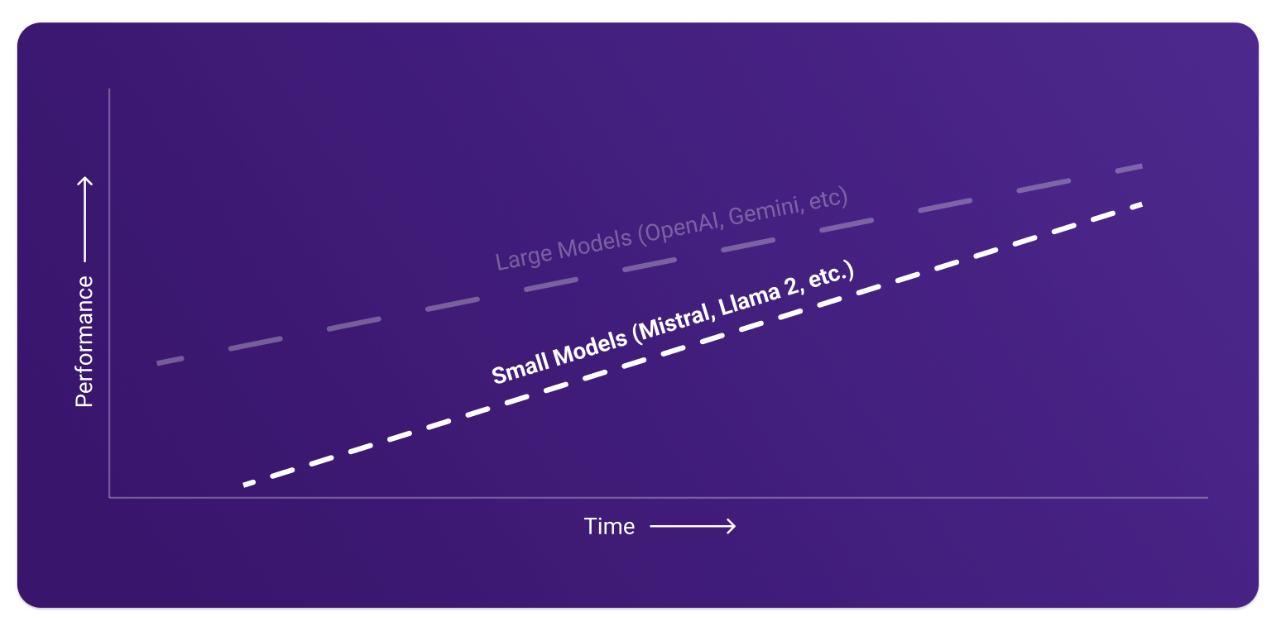

Serverless Small Models that don’t need GPUs to operate

Multiple projects have demonstrated that small models such as Mistral and Llama 2, when fine-tuned for specific use cases, perform very well compared to large models such as OpenAI’s GPT models and Google’s Gemini.

One-Click Fine Tuning

As we worked on our Small Model capabilities, we quickly realized that there was a night-and-day difference between the generic versions of these models and versions fine-tuned on even hundreds or thousands of examples, at a cost of about $100. But fine-tuning a model requires significant data science expertise today. So Aible automated the process end to end: collecting fine-tuning data, setting the correct fine-tuning parameters, running the fine-tuning, and making the fine-tuned model available as a serverless option in Aible. Users simply give feedback with thumbs up/down or by editing chat responses; once enough data has been collected, they just click a button.
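
The feedback-collection step can be sketched as turning each thumbs up/down or edited response into a training example, then checking whether enough examples exist to start a job. This is a hypothetical illustration, not Aible’s pipeline: the `Feedback` record, the rule that an edit outranks a rating, the `MIN_EXAMPLES` threshold of 200, and the chat-style JSONL layout (similar to what several fine-tuning services accept) are all assumptions.

```python
import json
from dataclasses import dataclass
from typing import List, Optional

MIN_EXAMPLES = 200  # hypothetical threshold for "enough data has been collected"


@dataclass
class Feedback:
    prompt: str
    response: str
    rating: Optional[str] = None           # "up", "down", or None
    edited_response: Optional[str] = None  # user's corrected answer, if any


def to_training_example(fb: Feedback) -> Optional[dict]:
    """Turn one feedback record into a chat-style training example, or None."""
    if fb.edited_response:          # an edit is treated as the strongest signal
        target = fb.edited_response
    elif fb.rating == "up":         # an approved answer is kept as-is
        target = fb.response
    else:                           # thumbs-down (or no signal) without an edit: skip
        return None
    return {"messages": [
        {"role": "user", "content": fb.prompt},
        {"role": "assistant", "content": target},
    ]}


def build_dataset(records: List[Feedback]) -> List[dict]:
    """Keep only records that yield a usable training target."""
    return [ex for ex in map(to_training_example, records) if ex is not None]


def ready_to_fine_tune(dataset: List[dict], minimum: int = MIN_EXAMPLES) -> bool:
    """The 'one click' becomes available once the dataset reaches the threshold."""
    return len(dataset) >= minimum


def to_jsonl(dataset: List[dict]) -> str:
    """Serialize one JSON object per line, a common fine-tuning upload format."""
    return "\n".join(json.dumps(ex) for ex in dataset)
```

A thumbs-down without an edit is dropped here because it says the answer was wrong without saying what the right answer is; other designs keep such records as negative examples for preference-based training.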

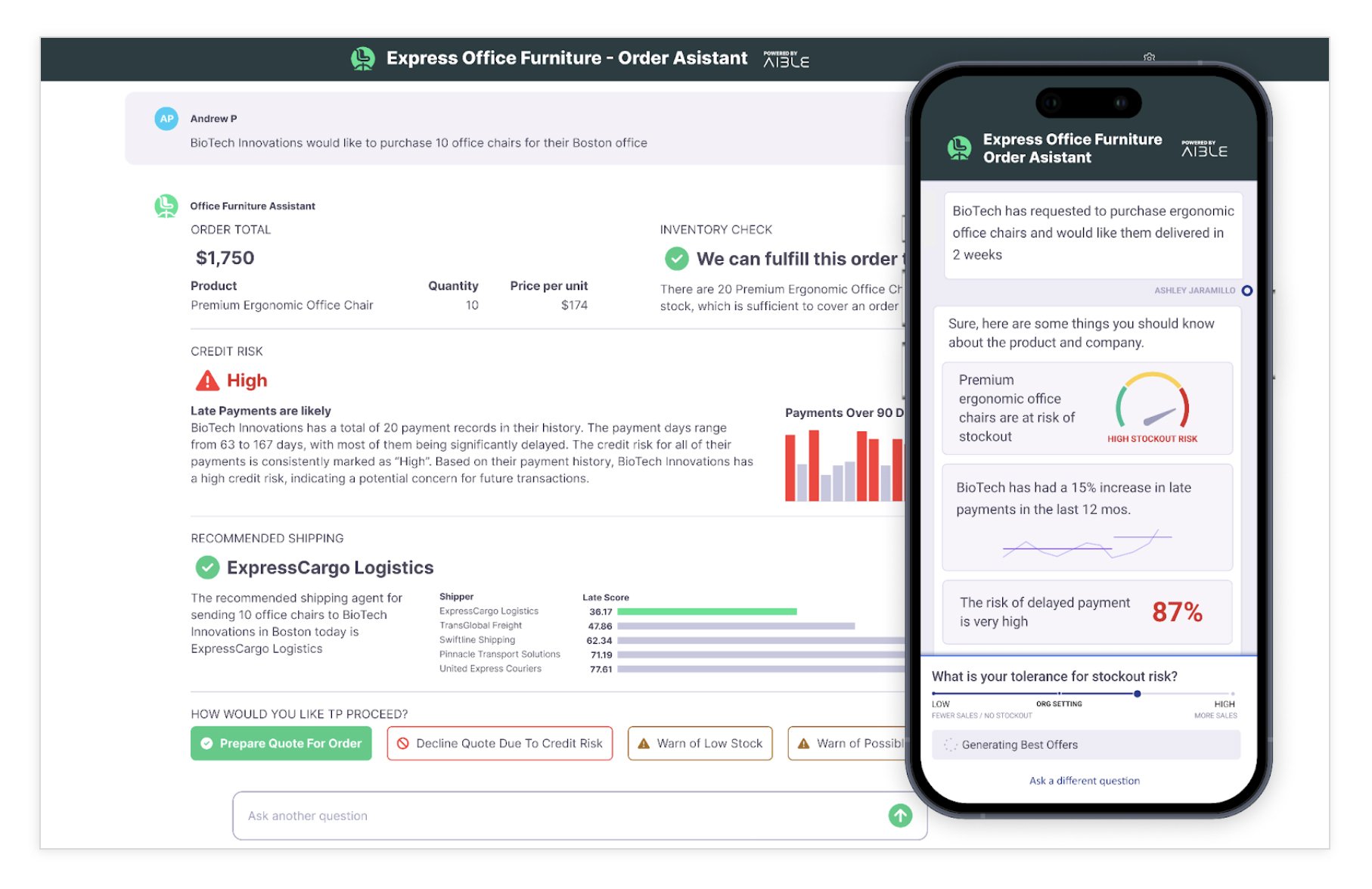

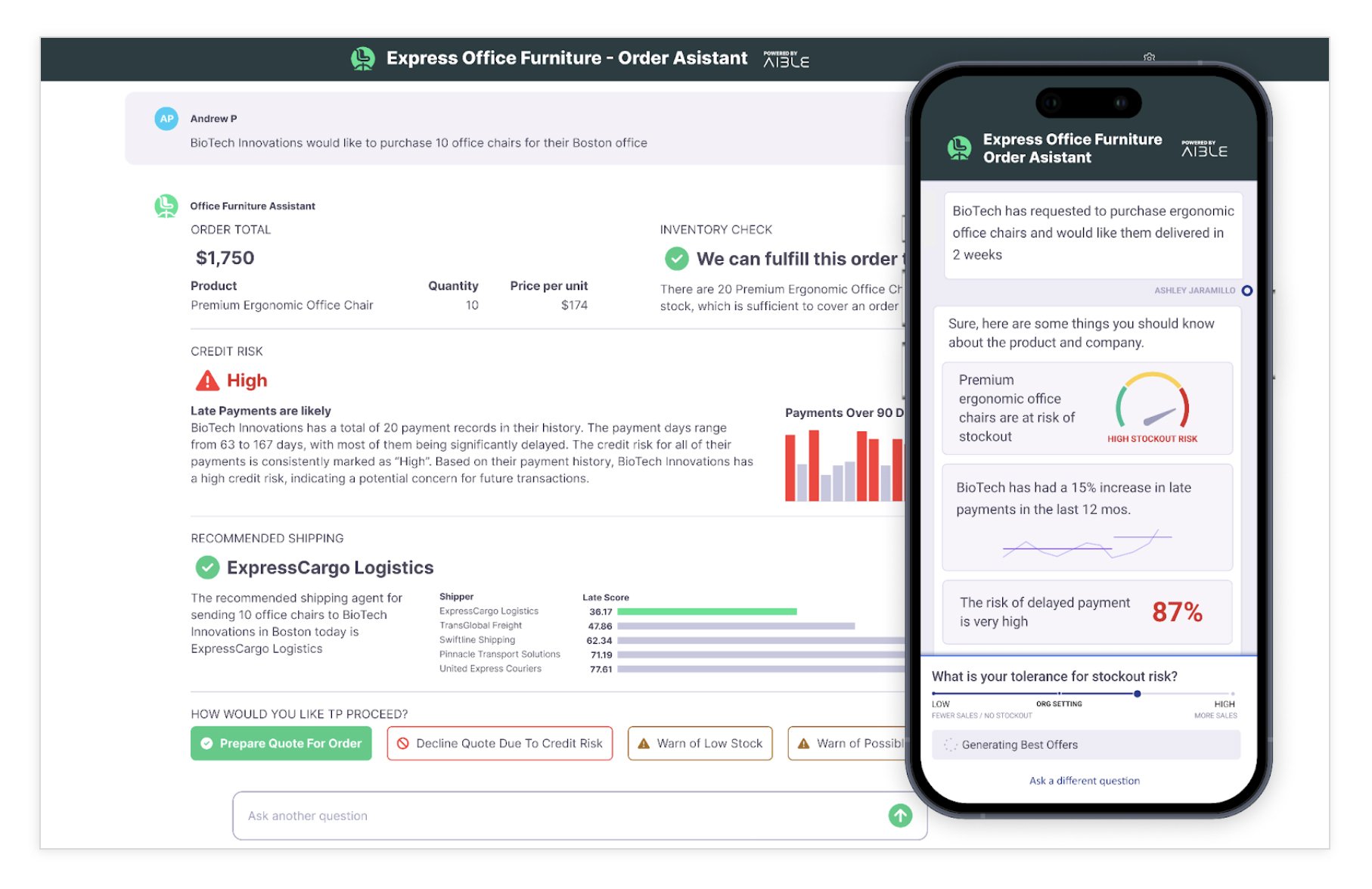

Aible Anywhere

Our enterprise customers are looking for tailored AI and analytics experiences that support end-to-end business process workflows, rather than monolithic or piecemeal applications. They want to use AI to reduce risk and friction across end-to-end processes such as Order to Cash and Procure to Pay.