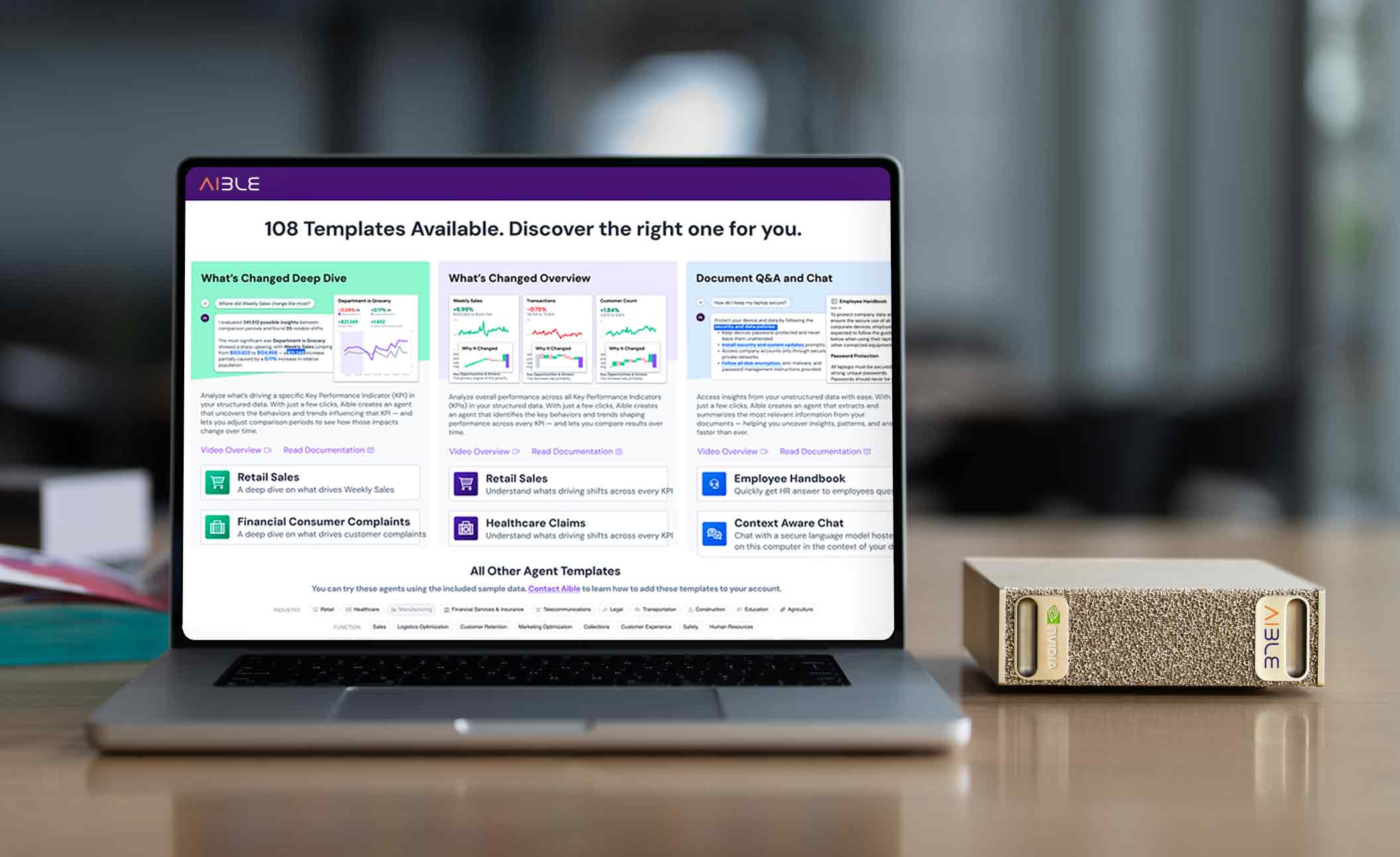

Your Own Personal AI Running on NVIDIA DGX Spark

All of Aible’s capabilities can now run on a single small desktop server: the NVIDIA DGX Spark. Aible runs its agents extremely fast because the user experience, the agent coordination, the agent tools (including vector databases), and the language models all run on the same silicon with shared memory, communicating synchronously without writing anything to disk.

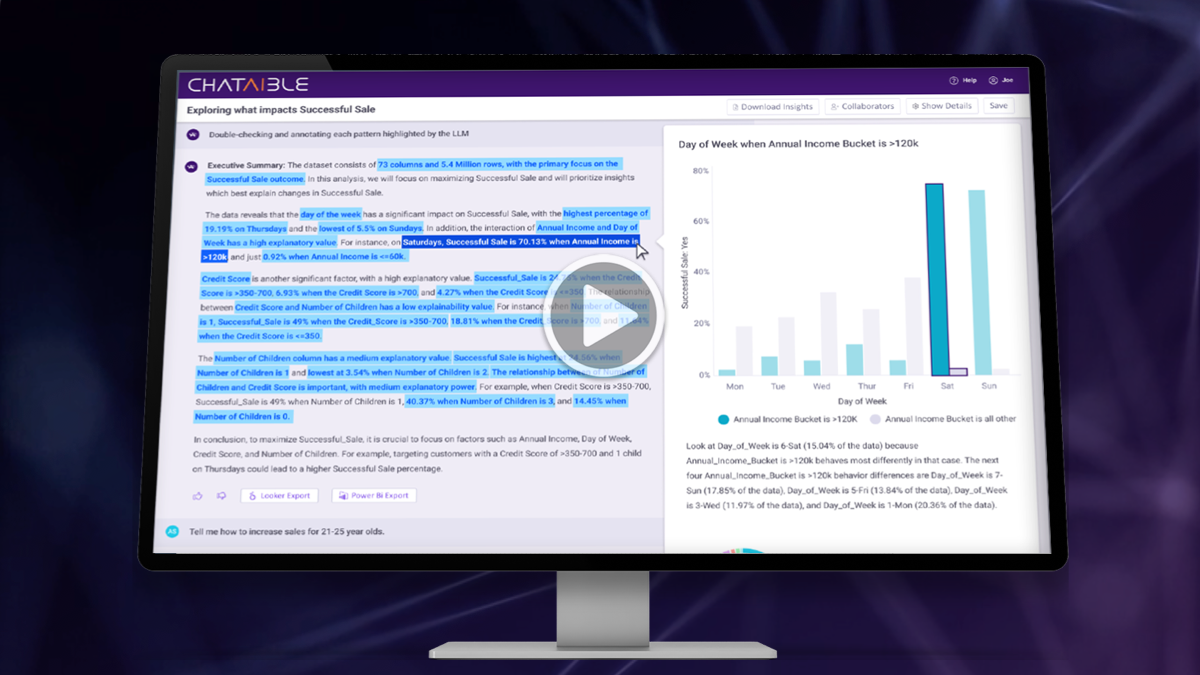

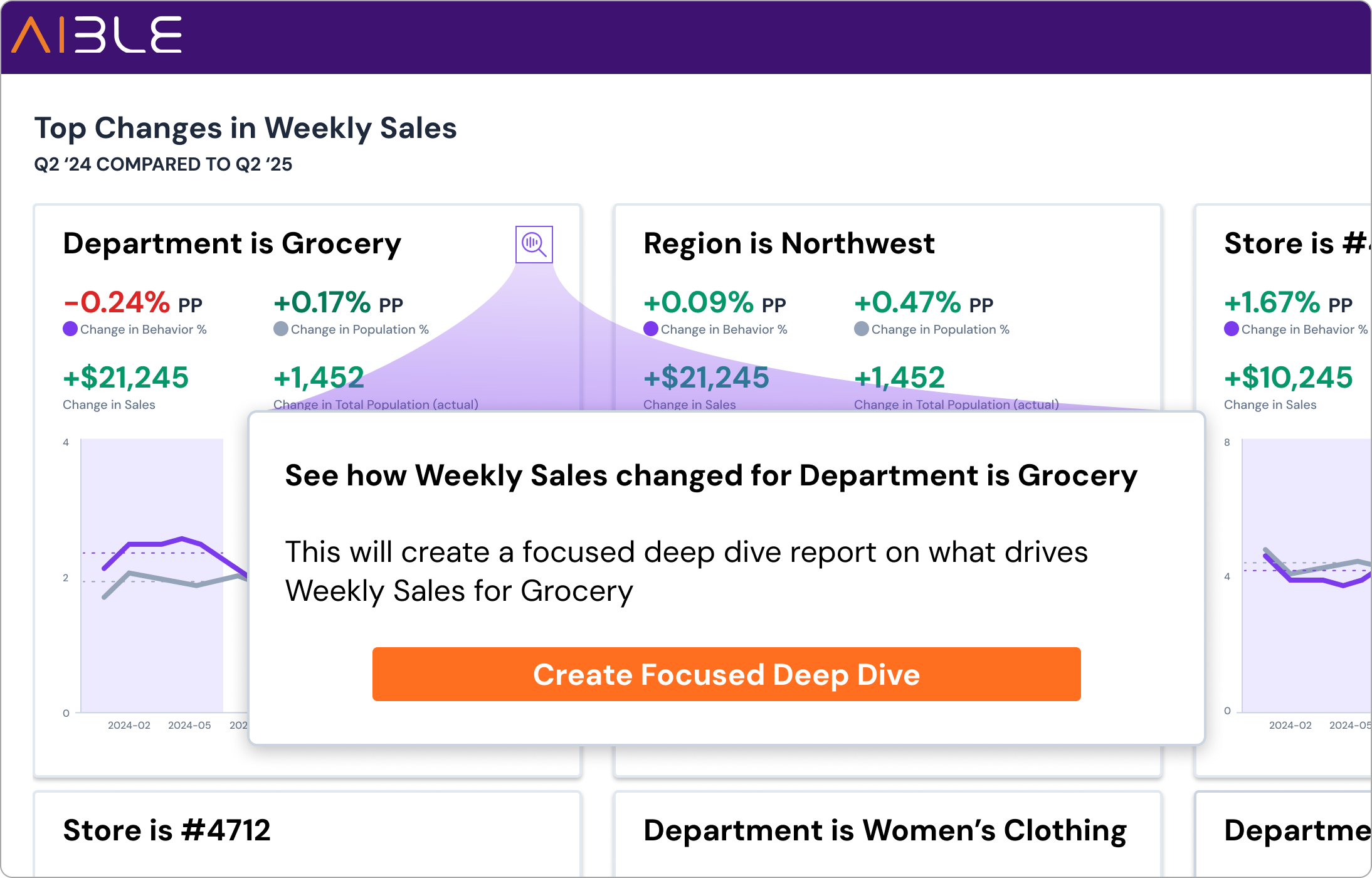

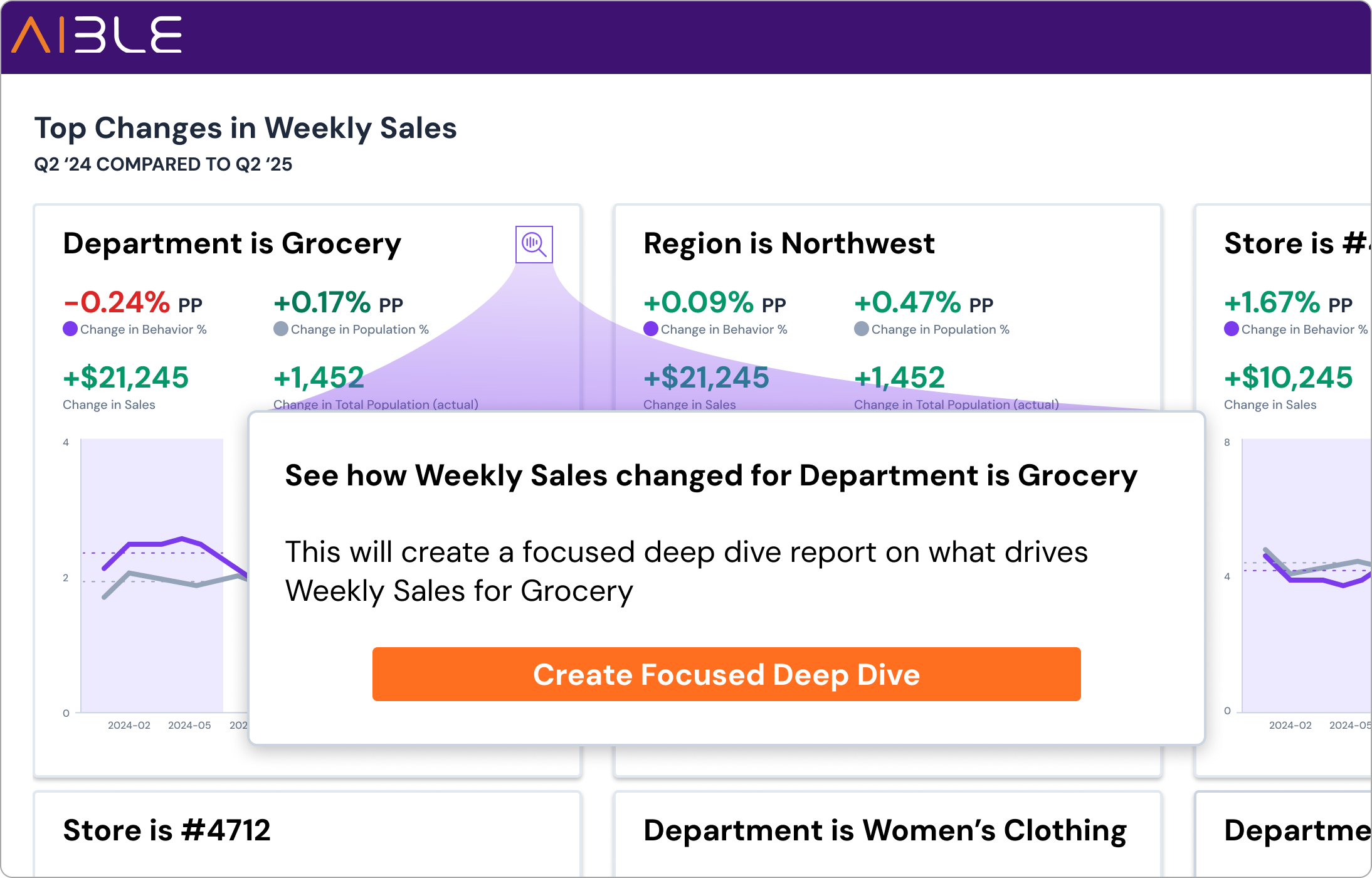

Discover Deeper Insights with Enhanced What’s Changed Focused Analysis

Customers have embraced Aible’s What’s Changed Overview and Deep Dive features for revealing how key metrics shift over time. Now, Aible takes this a step further with the new Focused Analysis, empowering users to select any insight and instantly explore every factor driving changes in that specific chart.

Additionally, you can chat with Aible to receive automated, conversational summaries of your focused insights. This seamless experience helps you concentrate on the most relevant details without the need to manually build or filter datasets. With Aible generating the precise data scope based on your questions, you can quickly uncover the underlying causes of change and take confident, data-driven action.
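
The general idea of tracing a change in a metric back to the factors that drove it can be shown with a small, generic sketch. This is not Aible’s implementation: the function name `change_drivers` and the sample regions and values are hypothetical. The sketch compares a metric across two periods, splits the total change by segment, and ranks segments by how much they moved.

```python
def change_drivers(before, after):
    """Break the total change in a metric into per-segment contributions.

    `before` and `after` map a segment name to the metric value in each period.
    A segment missing from one period is treated as 0 in that period.
    Returns the total change and a list of (segment, delta) pairs,
    largest absolute contribution first.
    """
    segments = set(before) | set(after)
    deltas = {s: after.get(s, 0.0) - before.get(s, 0.0) for s in segments}
    total_change = sum(deltas.values())
    ranked = sorted(deltas.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return total_change, ranked


# Hypothetical revenue by region for two periods.
before = {"West": 120.0, "East": 100.0, "South": 80.0}
after = {"West": 90.0, "East": 105.0, "South": 82.0}

total, drivers = change_drivers(before, after)
print(total)  # -23.0
for segment, delta in drivers:
    print(segment, delta)  # West -30.0, then East 5.0, then South 2.0
```

Here the overall drop of 23 is explained almost entirely by the West region, even though the other two regions grew slightly.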

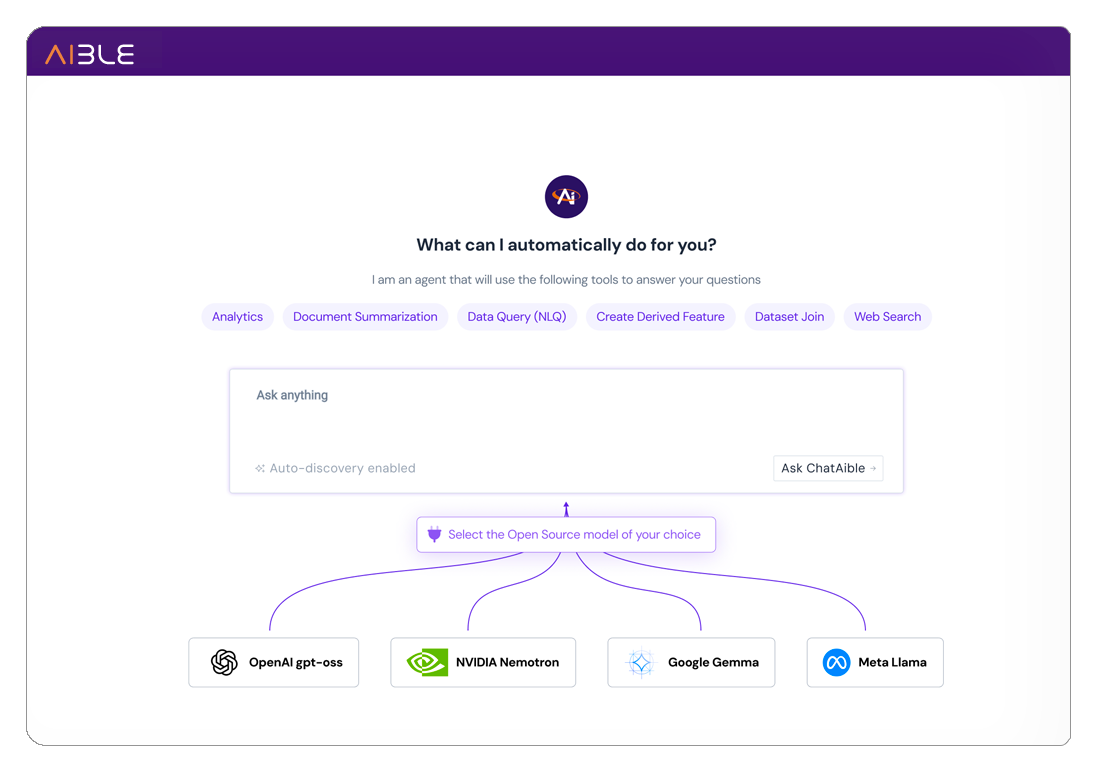

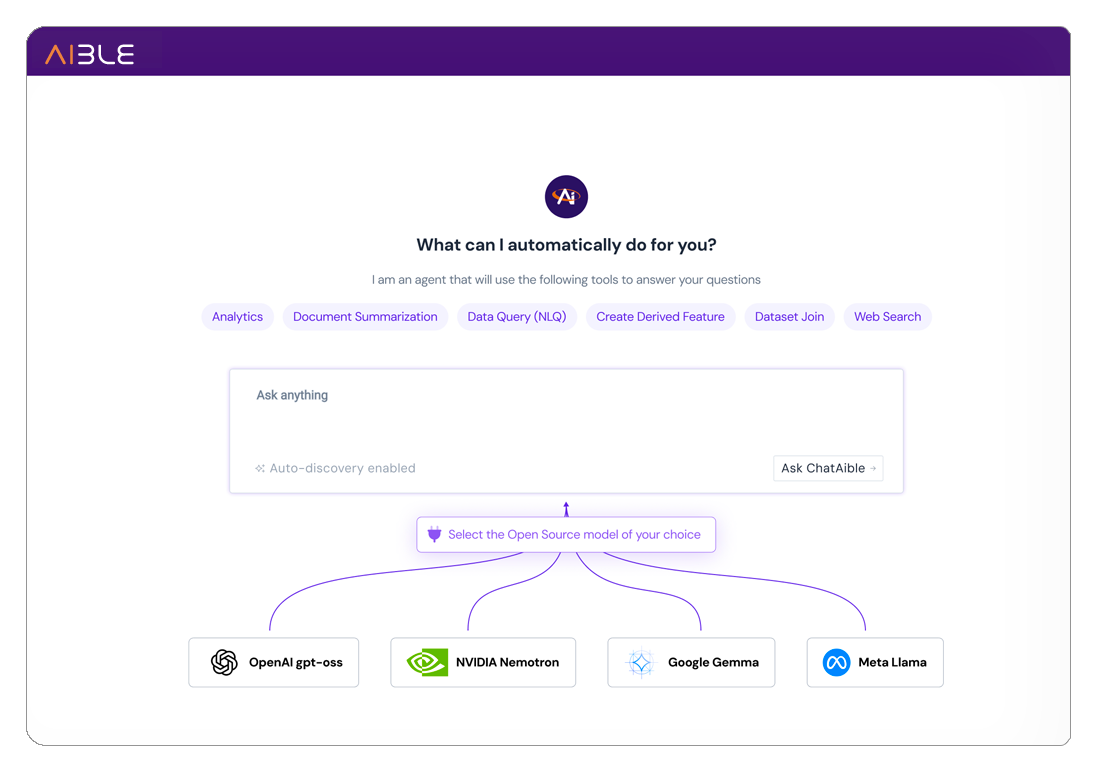

Maintain Full Control with Aible’s New Open-Source Models

Aible continues to add more open-source models on NVIDIA infrastructure. In this release we added several Nemotron models and OpenAI’s gpt-oss-120b to our default model libraries. You can use these with any Aible agent. They also run locally on the NVIDIA DGX Spark, so you can use them without sending data outside your personal supercomputer.

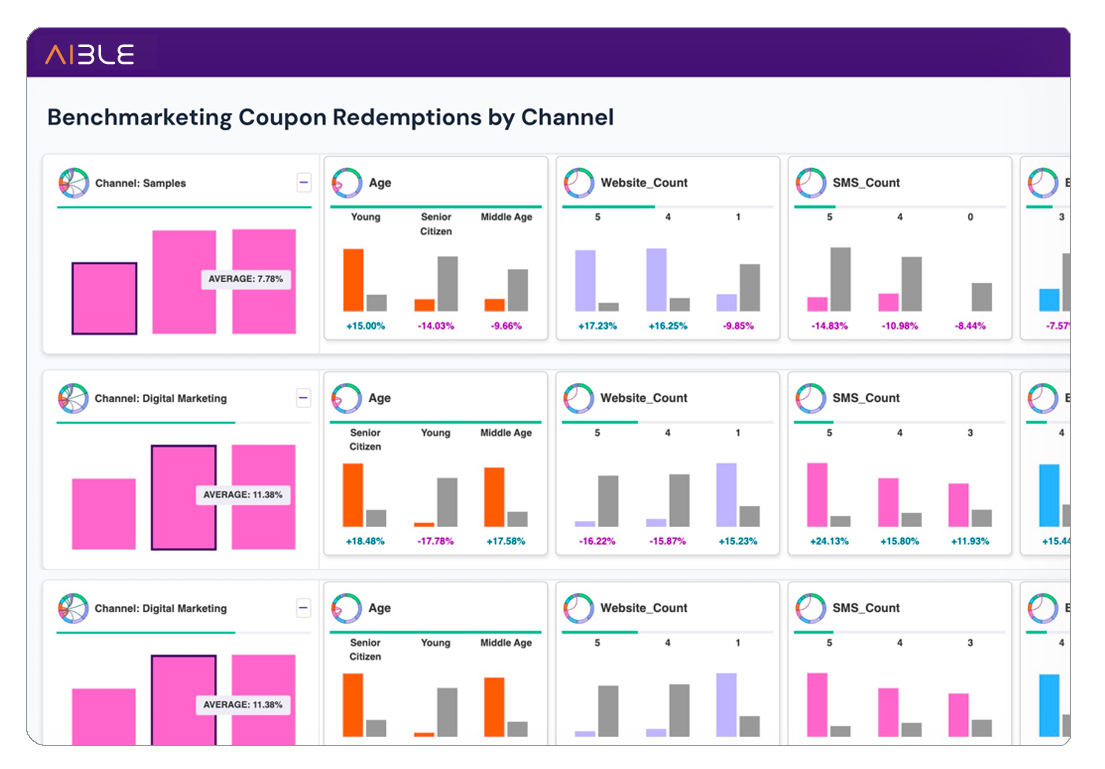

Compare Performance Instantly with Aible’s New Benchmark Analysis

Aible continues to add more templates to simplify the creation of agents. In this release we added a whole new category of agent templates called Benchmark Analysis. Aible’s Benchmark Analysis agent template makes it easy to see how your business stacks up across different groups or segments (e.g., salespeople, customers, products, or warehouses), all in one view.

You can instantly uncover which products, regions, or customer segments are outperforming expectations and which ones are falling behind. The agent summarizes these insights clearly, helping you immediately identify areas that matter for decision-making.
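
To make the idea of benchmarking concrete, here is a minimal, generic sketch that compares each group with the average across all groups. It is not how Aible’s template works internally: the function name `benchmark`, the 10% `tolerance` threshold, and the sample salespeople are hypothetical, and the sketch assumes positive metric values.

```python
from statistics import mean


def benchmark(metric_by_group, tolerance=0.10):
    """Compare each group's metric with the average across all groups.

    Groups at least `tolerance` above the average are "outperforming",
    groups at least `tolerance` below it are "falling behind",
    and everything else is "in line". Assumes the average is non-zero.
    """
    overall = mean(metric_by_group.values())
    report = {}
    for group, value in metric_by_group.items():
        ratio = value / overall  # 1.0 means exactly average
        if ratio >= 1 + tolerance:
            status = "outperforming"
        elif ratio <= 1 - tolerance:
            status = "falling behind"
        else:
            status = "in line"
        report[group] = (round(ratio, 2), status)
    return overall, report


# Hypothetical quarterly sales by salesperson.
sales = {"Alice": 160.0, "Bob": 80.0, "Chen": 60.0, "Dana": 100.0}
overall, report = benchmark(sales)
print(overall)  # 100.0
for group, (ratio, status) in report.items():
    print(f"{group}: {ratio:.2f}x average, {status}")
```

Comparing against the group average is only one possible baseline; a target, a prior period, or a peer median would work the same way by swapping out how `overall` is computed.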