Industrialized Agents at Scale

The Enterprise Problem Statement: Enterprises understand the strategic importance of AI but can struggle to deliver meaningful wins.

At GTC 2025, Aible highlighted three key trends that are converging in the world of AI to solve these problems:

- Agentic AI, where an automated system flexibly performs specific tasks

- Reasoning models that explain the steps they took to arrive at their conclusions

- Converged architectures, such as NVIDIA Grace Hopper and NVIDIA Grace Blackwell, that combine CPUs, GPUs, and memory in a single superchip

There are several technology pieces at play here, so let us look at this through the lens of a specific prototype we worked on with Dr. Matthew McCarville, the CIO of the State of Nebraska, who commented, “Aible’s focus on explaining the reasoning of the model to end users and letting them provide feedback to improve the model directly is key to empowering everyone with the power of AI. We are currently working with Aible to prototype a solution where Nebraska farmers and ranchers will be able to benefit from AI without sharing their proprietary agricultural data with others. Farmers and ranchers have very unique needs but also can’t be expected to learn artificial intelligence algorithms. This approach lets them teach the AI as they would teach a new employee. I saw the power of this concept two years ago when I was one of the judges for a contest at UC Berkeley AI Summit where high schoolers using Aible beat the results of expert data scientists. We want to empower agricultural experts, who have a deep domain knowledge, to leverage AI the same way.”

1. The Importance of Specialized AI in Agentic AI

There are many different ways farmers can benefit by adopting AI, from AI that detects plants under stress through image analysis, to AI that detects an animal in distress from the sounds it makes, to AI that continuously monitors videos to detect whether workers are wearing the appropriate safety equipment. But farmers don’t have the time to set up or work with such AI. At most, a farmer may have time to click a button on a reasoning step and explain where the AI went wrong.

Also, while AI can do a very good job when specialized to a specific task, a general-purpose model that does well on generic benchmarks may fall short of expectations. For example, the Aible Intern Benchmark Project, which was also revealed at GTC 2025, produced the results shown below when we tested general-purpose models on a very specific task: SQL generation, scored against a corresponding custom benchmark. Note that very small models, such as the 8-billion-parameter Llama-3.1 model, performed much better than far bigger and more advanced models once the small models were post-trained on the specific use case. While this benchmark used 1,000 examples of feedback, we have previously shown that models can improve significantly with as few as 100 examples of feedback.
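
The post does not say how the custom SQL benchmark scores a generated query. One common approach for SQL generation is execution accuracy: run the generated query and a reference query against the same database and compare the result sets. The sketch below assumes that metric, uses Python's built-in `sqlite3` module, and invents a small `farms` table purely for illustration; it compares rows as multisets, so it ignores row order.

```python
import sqlite3
from collections import Counter

def run(conn, sql):
    """Execute a query and return its rows as an order-insensitive multiset."""
    try:
        return Counter(conn.execute(sql).fetchall())
    except sqlite3.Error:
        return None  # invalid SQL can never match the reference

def execution_accuracy(conn, examples):
    """Fraction of (generated, reference) pairs whose result sets match."""
    hits = 0
    for generated, reference in examples:
        rows = run(conn, generated)
        if rows is not None and rows == run(conn, reference):
            hits += 1
    return hits / len(examples)

def build_demo_db():
    """Hypothetical toy database used only for this illustration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE farms (name TEXT, county TEXT, acres INTEGER)")
    conn.executemany(
        "INSERT INTO farms VALUES (?, ?, ?)",
        [("A", "Lancaster", 120), ("B", "Douglas", 300), ("C", "Lancaster", 80)],
    )
    return conn

# (generated SQL, reference SQL): one match, one wrong filter, one syntax error
DEMO_EXAMPLES = [
    ("SELECT SUM(acres) FROM farms WHERE county = 'Lancaster'",
     "SELECT SUM(acres) FROM farms WHERE county='Lancaster'"),
    ("SELECT name FROM farms WHERE acres > 50",
     "SELECT name FROM farms WHERE acres > 100"),
    ("SELEC name FROM farms", "SELECT name FROM farms"),
]

if __name__ == "__main__":
    print(execution_accuracy(build_demo_db(), DEMO_EXAMPLES))
```

On this toy data only the first pair counts as correct, so the score is one in three.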

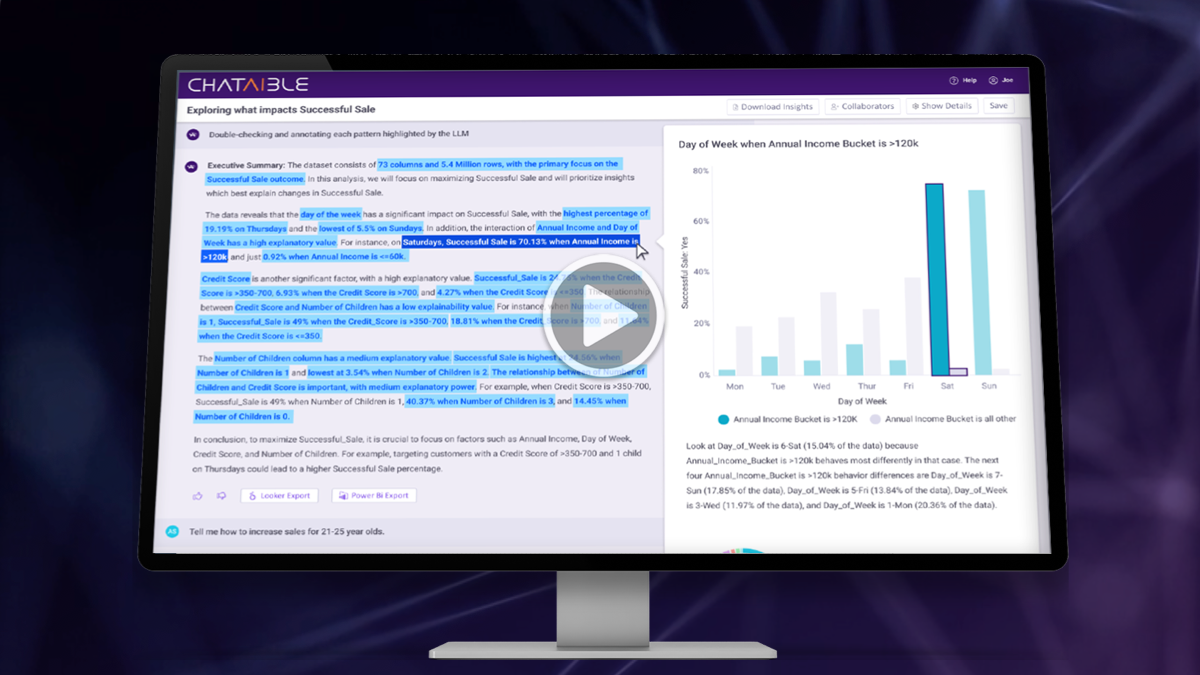

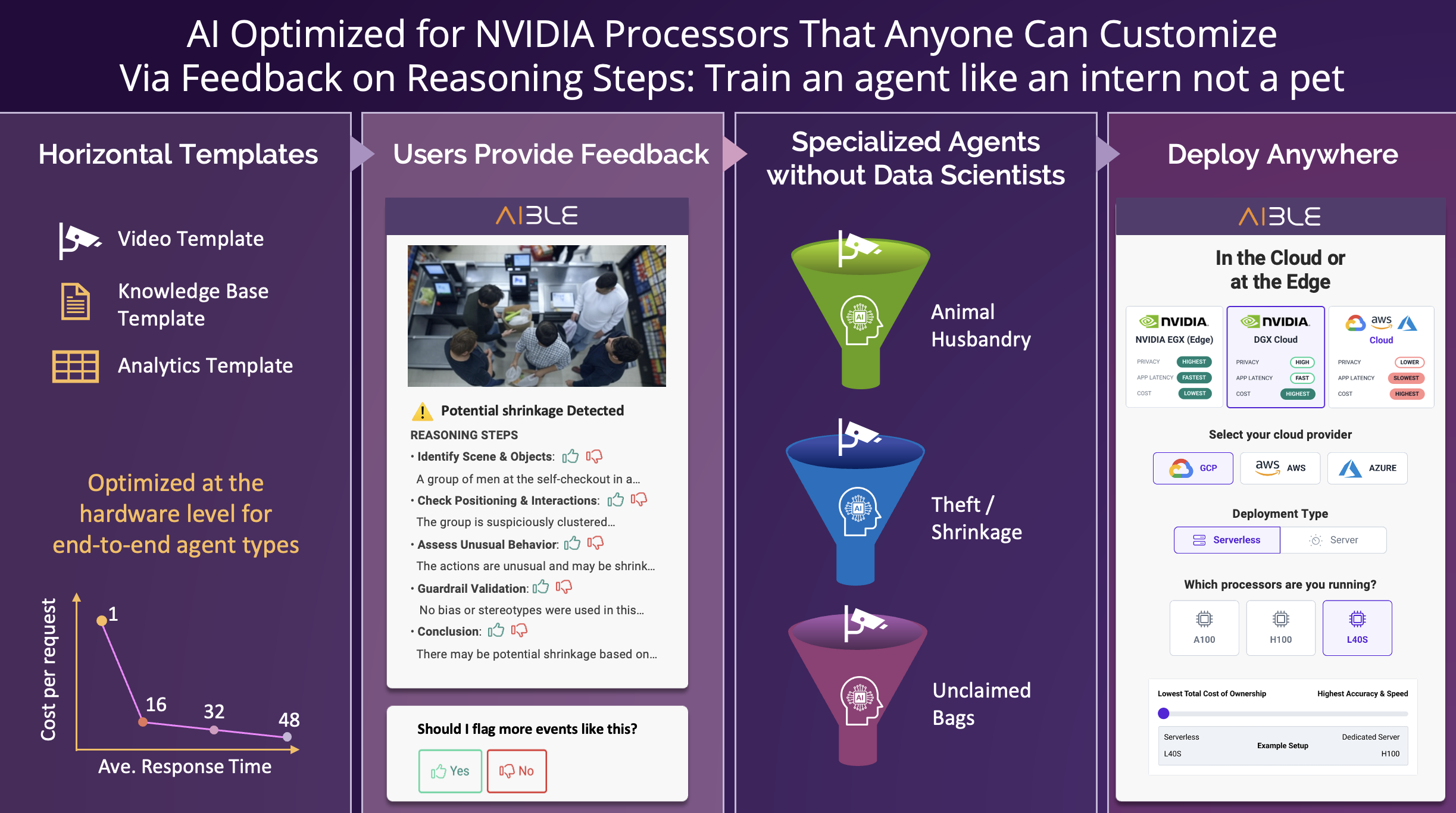

Thus, instead of having a single model that tries to perform all tasks, we created different types of templated agents (for video analytics, image analytics, data analytics, etc.) and post-trained them on user feedback to create specialized agents, each doing a specific task. Farmers could simply choose the templates that made sense to them, provide feedback on the agents, and make them their own.
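
To make the template idea concrete, here is a minimal Python sketch, assuming a simple data model in which a horizontal template carries default instructions and each specialized agent layers its owner's feedback on top. The names `InternTemplate`, `SpecializedAgent`, and `route` are hypothetical, and the sketch models only how feedback attaches to an agent, not the post-training itself.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class InternTemplate:
    """Hypothetical horizontal template, e.g. image or video analytics."""
    name: str
    task: str                          # the kind of input this template handles
    base_instructions: tuple[str, ...]

@dataclass
class SpecializedAgent:
    """One user's agent: a template plus that user's accumulated feedback."""
    template: InternTemplate
    owner: str
    feedback: list[str] = field(default_factory=list)

    def add_feedback(self, note: str) -> None:
        self.feedback.append(note)

    def instructions(self) -> list[str]:
        # Template defaults first, then the owner's corrections on top.
        return list(self.template.base_instructions) + self.feedback

def route(agents: dict[str, SpecializedAgent], task: str) -> SpecializedAgent:
    """Pick the agent whose template handles this kind of task."""
    for agent in agents.values():
        if agent.template.task == task:
            return agent
    raise KeyError(f"no agent for task {task!r}")

if __name__ == "__main__":
    image = InternTemplate("image-analytics", "image", ("Describe visible safety equipment.",))
    video = InternTemplate("video-analytics", "video", ("Flag frames that contain people.",))
    agents = {
        "ppe-check": SpecializedAgent(image, owner="farm-1"),
        "yard-camera": SpecializedAgent(video, owner="farm-1"),
    }
    agents["ppe-check"].add_feedback("Also check whether the person is wearing gloves.")
    print(route(agents, "image").instructions())
```

Keeping one small agent per task, rather than one model for everything, is what lets each agent absorb feedback that only matters for its own job.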

2. How Reasoning Models Improve Agentic AI & Build Trust

As noted above, as we post-trained the models, we also added the ability for each model to reason and to explain its reasoning steps to the user. In our testing with end users, we saw two key benefits from this.

First, users really appreciated being able to understand the reasoning steps of the model as that helped them trust the model. For example, in one case the model thought an image showed a person properly wearing safety equipment because the person had goggles and a hard hat on. But the person was not wearing gloves. The user was thus able to quickly see where the model went wrong.

Second, users could provide precise feedback, in this case commenting on the specific reasoning step that the AI should also have looked for gloves. This gave the system more context, which enabled it to improve the AI automatically. Had the user only given the image a thumbs down, there could have been many reasons for that negative feedback, and it would have been harder to improve the AI from that information alone. Users also subjectively felt a far greater degree of control when they could explain to the AI exactly what it got wrong, see it retry based on that clarification, and get it right. Essentially, this is a closed-loop feedback cycle in which the user immediately sees the AI improve based on their feedback. This feedback is also aggregated to post-train the AI, potentially allowing smaller models to achieve higher accuracy.
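
The difference between step-level feedback and a bare thumbs down can be sketched with the gloves example. In this toy version, `ppe_check` is a hypothetical rule-based stand-in for a reasoning model and `StepFeedback` is an assumed schema; a real system would hand the user's comment to a language model rather than rely on a structured `missing_check` field.

```python
from dataclasses import dataclass

@dataclass
class StepFeedback:
    """Feedback pinned to one reasoning step (hypothetical schema)."""
    step_index: int     # which reasoning step the user clicked on
    comment: str        # the user's plain-language explanation
    missing_check: str  # toy structured form of that explanation

def ppe_check(observed: set[str], required: list[str]) -> tuple[list[str], bool]:
    """Toy stand-in for a reasoning model: returns its reasoning steps and a verdict."""
    steps = [f"{item}: {'present' if item in observed else 'missing'}" for item in required]
    return steps, all(item in observed for item in required)

def apply_feedback(required: list[str], fb: StepFeedback) -> list[str]:
    """Closed loop: fold the user's correction into the next attempt."""
    if fb.missing_check in required:
        return required
    return required + [fb.missing_check]

def training_record(steps: list[str], fb: StepFeedback) -> dict:
    """Keep the faulty step and the correction together for later post-training.
    A bare thumbs down could only record that *something* was wrong."""
    return {"steps": steps, "faulty_step": steps[fb.step_index], "correction": fb.comment}

if __name__ == "__main__":
    observed = {"goggles", "hard hat"}  # what the image actually shows
    required = ["goggles", "hard hat"]  # the model forgot about gloves
    steps, ok = ppe_check(observed, required)
    fb = StepFeedback(1, "You should also have looked for gloves.", "gloves")
    print(training_record(steps, fb))
    steps, ok = ppe_check(observed, apply_feedback(required, fb))
    print(steps, ok)
```

The first attempt wrongly passes the image; after the retry, the reasoning includes "gloves: missing" and the verdict flips to a failure, while the stored record keeps the correction for later post-training.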

3. How Converged Architectures Accelerate Agentic AI

We believed that NVIDIA’s superchip design, which coherently combines CPUs and GPUs over a high-speed interface, would accelerate the agents significantly. To test this, we placed the entire Aible stack on the Grace CPU: the user interface, the Retrieval Augmented Generation (RAG) mechanisms for structured and unstructured data, the model coordination capabilities, and the automated post-training capabilities. We partitioned the Hopper GPU using techniques such as Multi-Instance GPU (MIG) to run the multiple models the agent needs at the same time.

The results were outstanding. Even with a very simple agent with just two models and three steps, the superchip was more than twice as fast as running the agent on a typical cloud architecture with the different models running optimally on different servers. This is because the agent management code in the cloud has to work asynchronously with each of the models underlying the agent, while on the superchip the coordination can be synchronous. Moreover, because we knew the precise performance characteristics of each individual model and could control their relative performance through how we allocated GPU resources, what concurrency settings we used for each model, and so on, we could optimize the agent for end-to-end performance. This is similar to optimizing a car manufacturing line either for how long it takes to build a specific car from start to finish (latency) or for maximum throughput (the total number of cars produced per day).
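
A back-of-the-envelope model helps separate the two goals in the car-line analogy. The sketch below assumes each agent step calls one model, that a distributed cloud deployment pays a fixed, hypothetical `hop_overhead_s` per model call, and that step times do not change with concurrency (on a real GPU they do). The model names and timings are invented for illustration and are not the measurements reported here.

```python
from collections import defaultdict

def end_to_end_latency(steps, hop_overhead_s=0.0):
    """Latency of one request: steps run in sequence, plus a fixed cost per model call."""
    return sum(t for _, t in steps) + hop_overhead_s * len(steps)

def max_throughput(steps, concurrency):
    """Requests per second the agent can sustain.

    Each model handles up to concurrency[model] requests at once and is busy
    for the sum of its step times on every request, so the busiest model
    (relative to its concurrency) is the bottleneck.
    """
    busy = defaultdict(float)
    for model, t in steps:
        busy[model] += t
    return min(concurrency[m] / busy[m] for m in busy)

if __name__ == "__main__":
    # Hypothetical agent: two models, three steps, (model, seconds) per step.
    steps = [("planner", 0.25), ("vision", 1.0), ("planner", 0.75)]
    print(end_to_end_latency(steps))                       # co-located, negligible hand-off cost
    print(end_to_end_latency(steps, hop_overhead_s=0.25))  # each call crosses the network
    print(max_throughput(steps, {"planner": 2, "vision": 4}))
```

In this made-up example the planner, not the vision model, limits throughput, so shifting GPU resources toward the planner would raise requests per second even though the vision step is the single longest step.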

Notably, an NVIDIA H100 system, with a GPU relatively similar to the superchip’s and a separate CPU in the same server, took about 41% longer than the superchip (5.87 seconds vs. 4.16 seconds). We believe this was due primarily to the benefits of the CPU/GPU superchip architecture.
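
The 41% figure uses the superchip’s time as the baseline. A quick check of the arithmetic, with the same gap expressed the other way around, using only the two timings reported above:

```python
def percent_longer(baseline_s, other_s):
    """How much longer other_s takes, as a percentage of baseline_s."""
    return (other_s - baseline_s) / baseline_s * 100

def percent_time_saved(baseline_s, other_s):
    """How much less time baseline_s takes, as a percentage of other_s."""
    return (other_s - baseline_s) / other_s * 100

if __name__ == "__main__":
    superchip_s, h100_s = 4.16, 5.87  # timings reported above
    print(f"H100 system takes {percent_longer(superchip_s, h100_s):.1f}% longer")
    print(f"superchip saves {percent_time_saved(superchip_s, h100_s):.1f}% of the time")
    print(f"speedup: {h100_s / superchip_s:.2f}x")
```

This prints about 41.1%, 29.1%, and 1.41x: the same gap reads as "41% slower" or "29% faster" depending on which system is the baseline.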

Note that the performance gains from the converged architecture pair well with the fact that smaller specialized models perform better than much larger generalized models. The smaller the model, the more of them we can fit onto a single superchip. Of course, superchips can also be combined to effectively create larger superchips that can hold more models.
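
As a rough illustration of why smaller models pack more densely, the sketch below estimates how many copies of a model's weights fit in a given GPU memory budget. It assumes weights dominate memory (1 billion parameters at 2 bytes each is about 2 GB), reserves a hypothetical 30% for KV cache and activations, and ignores the fixed slice sizes that MIG imposes, so real packing will be coarser. The 96 GB budget is an example value, not a measured configuration.

```python
def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight memory: 1e9 params x bytes/param = decimal GB."""
    return params_billion * bytes_per_param

def models_that_fit(gpu_gb: float, params_billion: float,
                    bytes_per_param: float = 2.0, reserve_fraction: float = 0.3) -> int:
    """How many copies of a model's weights fit after reserving headroom."""
    usable_gb = gpu_gb * (1.0 - reserve_fraction)
    return int(usable_gb // weights_gb(params_billion, bytes_per_param))

if __name__ == "__main__":
    for size in (8, 70):  # billions of parameters, 16-bit weights
        print(f"{size}B: {models_that_fit(96, size)} copies in 96 GB")
```

Under these assumptions, four 8B models fit in the budget where not even one 70B model does.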

4. Putting It All Together: Aible’s Unique Five-Step Approach

The key problem with the agentic future is the shortage of experts who can create the custom agents that can have maximum impact in the enterprise. The Aible Intern approach, highlighted at GTC 2025, solves this at scale.

- Templatize: Aible offers horizontal templates such as Natural Language Querying or Image Analytics. These templates serve as starting points for creating vertical, use-case-specific agent templates like Sales Analytics or Retail Theft Detection. These initial templates are referred to as ‘Aible Interns’ because they do not yet have the use-case or enterprise-specific context that would specialize them into user-specific agents.

- Optimize: Each template is optimized at the hardware level for different server and superchip configurations. For example, a video analytics template would need a very different set of models configured in a very different way than an augmented analytics template.

- Customize: Users can make the Intern their own by providing feedback on its chain of reasoning or final output. Absolutely no data science skills or IT skills are required to enable this. All the user needs is domain knowledge necessary to provide the Intern feedback on what it is doing right or wrong.

- Deploy: Agents can be securely deployed anywhere, for example on a superchip running at the edge on a farm without Internet access (air-gapped), or on NVIDIA DGX Cloud Serverless Inference, leveraging NVIDIA Cloud Functions (NVCF) to scale across multiple regions and clouds. The code and the user experience are exactly the same, and the agent always runs securely under the enterprise’s own control without exposing any data outside the enterprise. The performance and operating cost will, of course, vary depending on whether the agent is deployed on a superchip, a server, or serverless.

- Maintain: Companies have struggled to maintain their agents because the underlying technology has been evolving so fast. Because every Aible agent is derived from a specific Aible Intern template, we can update each template as appropriate as the underlying technology, such as language models, evolves. This way, all agents derived from the Intern templates can improve along with the technology.
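
The Maintain step relies on agents referencing their template rather than copying it. A minimal Python sketch, assuming hypothetical `Template` and `Agent` classes, placeholder model names, and invented per-target profile names, shows how one template upgrade reaches every derived agent while each agent keeps its own feedback and deployment target. It does not cover re-running post-training on the new model, which a real upgrade may need.

```python
from dataclasses import dataclass, field

@dataclass
class Template:
    """Hypothetical Intern template (Templatize step)."""
    name: str
    base_model: str            # placeholder model identifier
    profiles: dict[str, str]   # Optimize step: one tuned config per deployment target
    version: int = 1

    def upgrade(self, new_base_model: str) -> None:
        """Maintain step: adopt a newer model; every derived agent sees the change."""
        self.base_model = new_base_model
        self.version += 1

@dataclass
class Agent:
    """A user's agent, derived from (not copied from) a template."""
    template: Template
    feedback: list[str] = field(default_factory=list)  # Customize step
    target: str = "edge"                               # Deploy step

    def config(self) -> dict:
        return {
            "template": self.template.name,
            "model": self.template.base_model,
            "template_version": self.template.version,
            "profile": self.template.profiles[self.target],
            "feedback": list(self.feedback),
            "target": self.target,
        }

if __name__ == "__main__":
    tpl = Template("image-analytics", "vision-model-v1",
                   {"edge": "edge-superchip-profile", "serverless": "serverless-profile"})
    farm_agent = Agent(tpl, ["Also check for gloves."], target="edge")
    cloud_agent = Agent(tpl, target="serverless")
    tpl.upgrade("vision-model-v2")
    print(farm_agent.config())
    print(cloud_agent.config())
```

Both agents report `vision-model-v2` after the single upgrade, while the farm agent still carries its gloves feedback and each agent keeps the profile tuned for its own target.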

Agentic AI is here. And today any business user can create their own custom agents - optimized end-to-end from silicon to models to UI - without needing to know any data science. They are Aible!

Attendees of GTC 2025 can sign up to get involved in the Aible Intern Project at www.aible.com.